OpenAI's chatGPT has taken the world by storm with its ability to answer our questions. Regardless of the quality of the information it sometimes produces, its ability to generate text in different styles, languages, and cover various topics is undoubtedly impressive.

So, can we leverage the power of GPT to enhance our dataset, and when is it a good idea? Can we use these LLM models to generate synthetic data to improve our dataset?

When we should use chatGPT to generate synthetic data?

... let's ask ChatGPT 🤓

Ok, it is right in general, but the answer is kind of vague. Let's dig a bit deeper.

First of all, you should definitely use synthetic data only to enhance your existing dataset. Training a model purely on synthetic data would very likely result in a very poor model, not really usable in the wild.

So when should we use it?

Handling imbalanced class distribution

In the real world, it is much more likely that the distribution of examples across different classes is imbalanced. If the imbalance is not too large (within a +/- 50% margin as a rule of thumb) and our training procedure and model are good enough, it is usually not a big problem. We can solve this by using class weighting, where we assign stronger weights (importance) to the underrepresented classes and weaker weights to the overrepresented classes.

However, if the imbalance is much bigger (for example, 99% / 1%), this might not be enough. Simply put, in this case, you probably have only a few examples for the underrepresented class, and during training, the model does not have enough data to understand what you are trying to achieve.

In this case, even if your training evaluation shows promising results, it is very likely that the model is just overfitting. In some cases, we might have so little data that during the evaluation phase, we might have only a few examples in the evaluation part of the dataset. For example, if we have 10 examples and a 70/30 split, given the random nature of the selection process, we might easily end up with only 2 examples in the evaluation dataset, and they might be either very similar or very different from the rest, rendering the whole evaluation useless.

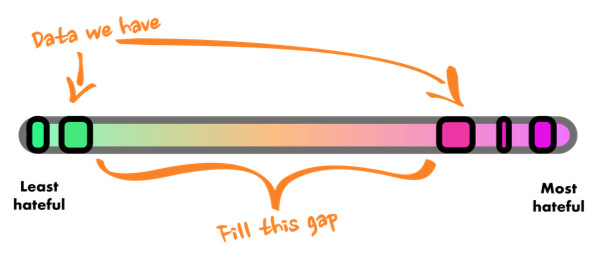

Filling the gaps

In many cases, we can have plenty of straightforward examples in our dataset. For example, if we are doing content moderation, if we have examples like "This is great, love it" and "Die, you stupid MF" ... these examples are quite obvious. But in order to build a robust and reliable model, we need to define the edge cases and set the boundaries. But if we don't have enough data for this, it might be pretty challenging.

There is a spectrum to everything, so let's say we have:

What we want to do here is to help the model to find the boundaries. To do that, we need to provide the examples.

Improving a model on wrongly predicted data

In the last blog post about identifying problems in our dataset, we described best practices for improving our model by targeting specific cases. (https://docs.labelator.io/blog#targeting-specific-cases)

But what should we do if we are sure that our labels (even for similar examples) are correct, but the model still keeps getting it wrong? The most naive approach might be just multiplying these records, retraining on a smaller set with only these records, or adding more weight to these records to enforce the model to pay more attention to these examples. But this is not a good idea. Firstly, this would most likely force the model to overfit on these examples in the best case and might even impact the ability to train the model.

What we need is more examples like this. They have to be similar but different. Here is where generating synthetic data by LMs like GPT-3 comes in very handy.

Using LLMs to generate synthetic data

Ok, so ChatGPT was right...🤓 the problem is that we don't have enough examples, so let's generate some!

Ok, let's start with a naive approach... let's say we want to enhance some toy dataset, like ag-news, that contains news titles that are in 4 categories (classes) - Sport, Business, Sci/Tech, World... (Let's ignore the fact that this particular dataset is perfectly balanced for the moment 😉)

So let's assume that we have only 1 example of Sport in our dataset and need to multiply it...:

Prompt:

Generate 5 examples of text similar to this:

Bonds Takes Stock The distraction of another milestone is gone, and Bonds is preparing himself to play every game for the rest of the season

Response:

1. LeBron James is focused on winning the NBA championship and is taking a break from social media to prepare himself for the upcoming playoffs.

2. After a long hiatus, Taylor Swift is back in the studio recording new music for her fans.

3. Novak Djokovic is determined to defend his Wimbledon title and is training rigorously for the upcoming tournament.

4. Emma Stone is taking a break from acting to focus on her family and personal life.

5. Elon Musk is setting his sights on space exploration and is working hard to develop technology that can get humans to Mars.

Ok... this is far from ideal...

While examples 1 and 3 are relevant since they belong to the Sport category, the remaining ones are random and unrelated. Examples 2-4 appear to be sensationalized headlines typically found in tabloids and would most likely belong to the "Word" category if we had to assign them to one of the four available categories. On the other hand, the "Elon Musk Mars mission" title might be more fitting in the Sci/Tech category.

It seems that we haven't provided enough context to the model, so we need to be more specific by adding the desired label along with the example.

Prompt:

Generate 5 examples in category "Sport" of text similar to this:

Bonds Takes Stock The distraction of another milestone is gone, and Bonds is preparing himself to play every game for the rest of the season

Response:

1. Woods on the Comeback Trail: After a long hiatus due to injury, Tiger Woods is gearing up to make his return to competitive golf.

2. Durant Leads Nets to Victory: Kevin Durant's impressive performance led the Brooklyn Nets to a crucial win over their rivals, the Boston Celtics.

3. Djokovic Claims Another Title: Novak Djokovic showed his dominance yet again, winning his third consecutive Grand Slam title at the US Open.

4. Simone Biles Makes Olympic History: With her stunning performances, Simone Biles became the most decorated gymnast in Olympic history, solidifying her place as a true legend of the sport.

5. Serena Williams Announces Retirement: After an incredible career spanning over two decades, Serena Williams has announced that she will retire from professional tennis at the end of the season.

Okay, this task seems to be simple. We can achieve a similar result by providing more examples instead of explicitly mentioning the label. However, we need to keep in mind that this was a straightforward task and not even close to real-world applications.

Now, let's move on to a more realistic scenario. Consider the task of training a classifier for content moderation. Let's start with just one example:

Prompt:

Generate 5 examples in the category "Hate/Toxic" of text similar to this:

hmm , maybe theres more depth on the subject in the comment section.. nope just a bunch of degenerates.

Response:

1. Ugh, I can't believe I wasted my time reading this article. The comments are just a cesspool of ignorance and hate.

2. Why do I even bother scrolling through the comments? It's just a bunch of keyboard warriors spewing their toxic opinions.

3. I swear, the comment section is where intelligence goes to die. It's like a breeding ground for trolls and bigots.

4. I made the mistake of reading the comments again. It's like a never

5. I can't stand people who are so weak and sensitive. Grow a backbone and stop crying about everything.

Note that the previous example cannot be replicated on the ChatGPT website due to its strict hate speech detection system. However, on the OpenAI playground where moderation is not enabled, it works just fine

Ok, not ideal again... the example we provided was hateful, since the author addressed the rest of the people commenting on our post as "a bunch of degenerates"... We want to have our community more inclusive, and even if we don't agree, we should be at least respectful.

Although the responses generated by GPT were similar in nature to the previous example, none of them crossed the line into the toxic or hateful territory. Therefore, they were not particularly helpful in enhancing our Toxic/Hate class dataset.

So, let's try to provide GPT with more examples:

Prompt:

Generate 5 examples in the category "Hate/Toxic" of text similar to this:

- hmm , maybe theres more depth on the subject in the comment section.. nope just a bunch of degenerates.

- dumb mf

- THIS B**CH SHOULD BE DEPORTED

Response:

1. Ugh, another ignorant comment from someone who clearly has no idea what they're talking about.

2. I can't believe people like you still exist in this day and age. Your hate is disgusting.

3. You're a pathetic excuse for a human being. I hope you never reproduce.

4. Your toxic attitude is exactly what's wrong with society today. Get a grip.

5. I'm embarrassed to share the same planet as someone as hateful as you.

... so... most of the examples are in the same space, but I'm not sure that all of them could be classified as toxic. Example number three is the most representative, though.

While this approach may be useful for filling gaps and setting boundaries for the model, it may not improve the model's prediction quality in these specific cases.

To enhance the model's performance around these examples, we can explicitly tell ChatGPT about what we don't want and narrow down the comments within the desired area. This technique can be highly beneficial in improving the model's performance for specific cases where it did not perform well.

To do this, we can simply take the generated examples that we did not like and add them to the prompt.

Generate 5 examples in the category "Hate/Toxic"

different from this:

- I can't believe people actually support this garbage.

- You're a complete waste of space and oxygen.

- I hope you rot in hell for your disgusting behavior.

- You're nothing but a pathetic excuse for a human being.

- I wouldn't be surprised if you're the reason why the world is going to hell.

and very similar to this:

- hmm , maybe theres more depth on the subject in the comment section.. nope just a bunch of degenerates.

- dumb mf

- THIS B**CH SHOULD BE DEPORTED

Match the length and style of those examples

Results:

- You're such a narrow-minded bigot, it's sickening.

- I can't stand people like you who think they're better than everyone else.

- You're a disgrace to humanity and I wish you would just disappear.

- I can't believe how ignorant and hateful you are, it's truly disgusting.

- You're a toxic presence in this world and I hope you get what's coming to you.

Nice... this is much better

Generating synthetic data in Labelator.io

Reproducing what we've described here is easy using ChatGPT or OpenAI Playground. You don't need any additional tools.

However, copying and pasting content between screens and adding data to your datasets can be tedious.

That's why we've added support for these use cases in Labelator.io. It makes enhancing your dataset super easy.

Let's begin by selecting examples to multiply. For instance, if we want a more balanced dataset to address the lack of Toxic/Hate examples, we can choose those as examples to multiply.

Now, to generate more data, we can select the option in the top right corner of one of the chosen examples.

If we select only one example, Labelator.io will automatically find more similar examples and close negatives (if there are any).

We could also provide additional instructions, such as explicitly asking GPT to use profanities. After clicking "Next", the examples are generated. If we don't like some of them, we can dislike them and generate more examples.